Motion Planning with Vision

In advanced robotic applications, integrating sensor data into motion planning is crucial for operating in unstructured environments. The Jacobi Motion and Vision libraries provide tools to incorporate visual data, such as depth images and point clouds, into the planning process. This tutorial guides you through adding such sensor data to the collision environment, either as a depth map or as a point cloud, and keeping it up to date as the scene changes.

There are two primary methods to incorporate sensor data into collision checking during motion planning:

DepthMap: Efficient for environments where obstacles can be represented as surfaces with varying heights (e.g., a stack of boxes). Fast collision checking due to simplified representation. Recommended when working with camera data in applications such as palletizing or bin picking.

PointCloud: Suitable for complex 3D structures where obstacles cannot be represented as simple height variations. More computationally intensive than a DepthMap, but provides more accurate collision checking in complex environments. Recommended for complex environments and when a depth map is not available, e.g., due to unknown camera intrinsics or when data is obtained from a different sensor type.

Both methods allow the planner to consider real-world obstacles during motion planning, enhancing the robot’s ability to operate safely and efficiently.
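Both representations usually start from the same depth image, and the need for camera intrinsics mentioned above comes from the back-projection step. The following is a minimal sketch of that step using the standard pinhole camera model in plain Python. The function name `back_project` and the intrinsics values are hypothetical and chosen for illustration; this is not the Jacobi Vision implementation.

```python
def back_project(depth, fx, fy, cx, cy):
    """Convert a depth image (rows of depths in meters) to camera-frame 3D points.

    Uses the pinhole model: x = (u - cx) * d / fx, y = (v - cy) * d / fy, z = d.
    """
    points = []
    for v, row in enumerate(depth):
        for u, d in enumerate(row):
            if d <= 0.0:  # skip pixels without a valid depth reading
                continue
            points.append(((u - cx) * d / fx, (v - cy) * d / fy, d))
    return points


# A tiny 2x2 depth image with one invalid pixel (illustrative values only)
depth = [[1.0, 1.0],
         [0.0, 2.0]]
points = back_project(depth, fx=2.0, fy=2.0, cx=0.5, cy=0.5)
```

Without `fx`, `fy`, `cx`, and `cy`, the pixel coordinates cannot be converted to metric positions, which is why a point cloud from another source is the fallback when intrinsics are unknown.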

First, define the Jacobi environment. For a more in-depth explanation of the environment setup, refer to the Environment tutorial.

from jacobi import Environment

# `robot` is a robot instance created beforehand (see the Environment tutorial)

environment = Environment(robot, safety_margin=0.01)

Working with Depth Maps

A DepthMap object represents the environment as a grid of depth values that corresponds to the orthographic projection of a depth image. You can create a DepthMap from a depth image obtained from a camera or loaded from a file.

from jacobi_vision import DepthImage

from jacobi import DepthMap

# Load a depth image using your preferred method

depth_image = DepthImage.load_from_file('path/to/depth_image.npy')

depth_map = depth_image.to_depth_map(scale=1)

Then, create an obstacle from the DepthMap and add it to the environment.

from jacobi import Obstacle

# `camera` is assumed to be defined already; its origin places the depth map in the scene

depth_map_obstacle = Obstacle(depth_map, origin=camera.origin)

environment.add_obstacle(depth_map_obstacle)

After adding the obstacle and planning a trajectory, the environment might have changed, for example, if a box was moved. You can update the depth map in the environment to reflect the new state.

# `new_depth_image` is a newly captured DepthImage of the changed scene

depth_map_obstacle.collision.depths = new_depth_image.to_depth_map().depths

environment.update_depth_map(depth_map_obstacle)
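To make the depth map idea more concrete, here is a minimal sketch of why a height grid allows fast collision checks and how replacing its values reflects a changed scene. The `HeightGrid` class is a hypothetical toy stand-in written in plain Python. It is not the Jacobi `DepthMap` and ignores the camera origin and robot geometry.

```python
from dataclasses import dataclass


@dataclass
class HeightGrid:
    """Toy stand-in for a depth map: obstacle heights on a regular x-y grid."""
    heights: list      # heights[row][col] in meters above the grid plane
    cell_size: float   # edge length of one grid cell in meters

    def height_at(self, x, y):
        """Return the obstacle height under (x, y); 0.0 outside the grid."""
        col = int(x // self.cell_size)
        row = int(y // self.cell_size)
        if 0 <= row < len(self.heights) and 0 <= col < len(self.heights[row]):
            return self.heights[row][col]
        return 0.0

    def collides(self, x, y, z, margin=0.0):
        """A point collides if it lies below the surface plus a safety margin."""
        return z - margin < self.height_at(x, y)


# A 0.3 m high box occupies the right-hand column of a 2x2 grid
grid = HeightGrid(heights=[[0.0, 0.3], [0.0, 0.3]], cell_size=0.1)
hit_before = grid.collides(0.15, 0.05, 0.2)

# The box was moved away: swap in the new heights, as with a new depth image
grid.heights = [[0.0, 0.0], [0.0, 0.0]]
hit_after = grid.collides(0.15, 0.05, 0.2)
```

Each check is a single grid lookup, which is the reason a surface-like representation is cheaper to query than a general 3D structure.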

Working with Point Clouds

A PointCloud object represents the environment using a set of 3D points.

from jacobi_vision import DepthImage

from jacobi import Frame, PointCloud

points = depth_image.to_point_cloud()

# Create a PointCloud object. Resolution is in meters, used for octree construction.

point_cloud = PointCloud(points.tolist(), resolution=0.01)
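As a rough intuition for the resolution parameter, the sketch below groups points into cubic cells of the given edge length and keeps one representative per occupied cell. It uses a flat voxel grid in plain Python rather than an octree, and the function name `voxel_downsample` is hypothetical. It only illustrates how a resolution discretizes space and makes no claim about how Jacobi builds its internal structure.

```python
import math


def voxel_downsample(points, resolution):
    """Return the centers of all voxels (cubes of edge `resolution`) that contain a point."""
    occupied = set()
    for x, y, z in points:
        occupied.add((
            math.floor(x / resolution),
            math.floor(y / resolution),
            math.floor(z / resolution),
        ))
    return sorted(
        ((i + 0.5) * resolution, (j + 0.5) * resolution, (k + 0.5) * resolution)
        for i, j, k in occupied
    )


# The first two points fall into the same 1 cm voxel, the third into another
raw_points = [
    (0.101, 0.202, 0.303),
    (0.104, 0.206, 0.307),
    (0.551, 0.202, 0.303),
]
reduced = voxel_downsample(raw_points, resolution=0.01)
```

A coarser resolution merges more points per cell, trading geometric detail for fewer elements to check.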

Create an obstacle from the PointCloud and add it to the environment.

point_cloud_obstacle = Obstacle(point_cloud, origin=Frame()) # world frame

environment.add_obstacle(point_cloud_obstacle)

When you want to remove certain objects from the collision environment (e.g., a box that you want to grasp), you can remove these from the sensor data to prevent the robot from considering them as obstacles. We call this feature point cloud carving.

from jacobi import Box

known_box = Box(x=0.5, y=0.5, z=0.3) # Dimensions in meters

known_obstacle = Obstacle(known_box, origin=Frame(x=0.0, y=0.0, z=0.15))

# Carve the known obstacle from the point cloud

environment.carve_point_cloud(point_cloud_obstacle, known_obstacle)

After carving, you can update the point cloud in the environment.

environment.update_point_cloud(point_cloud_obstacle)

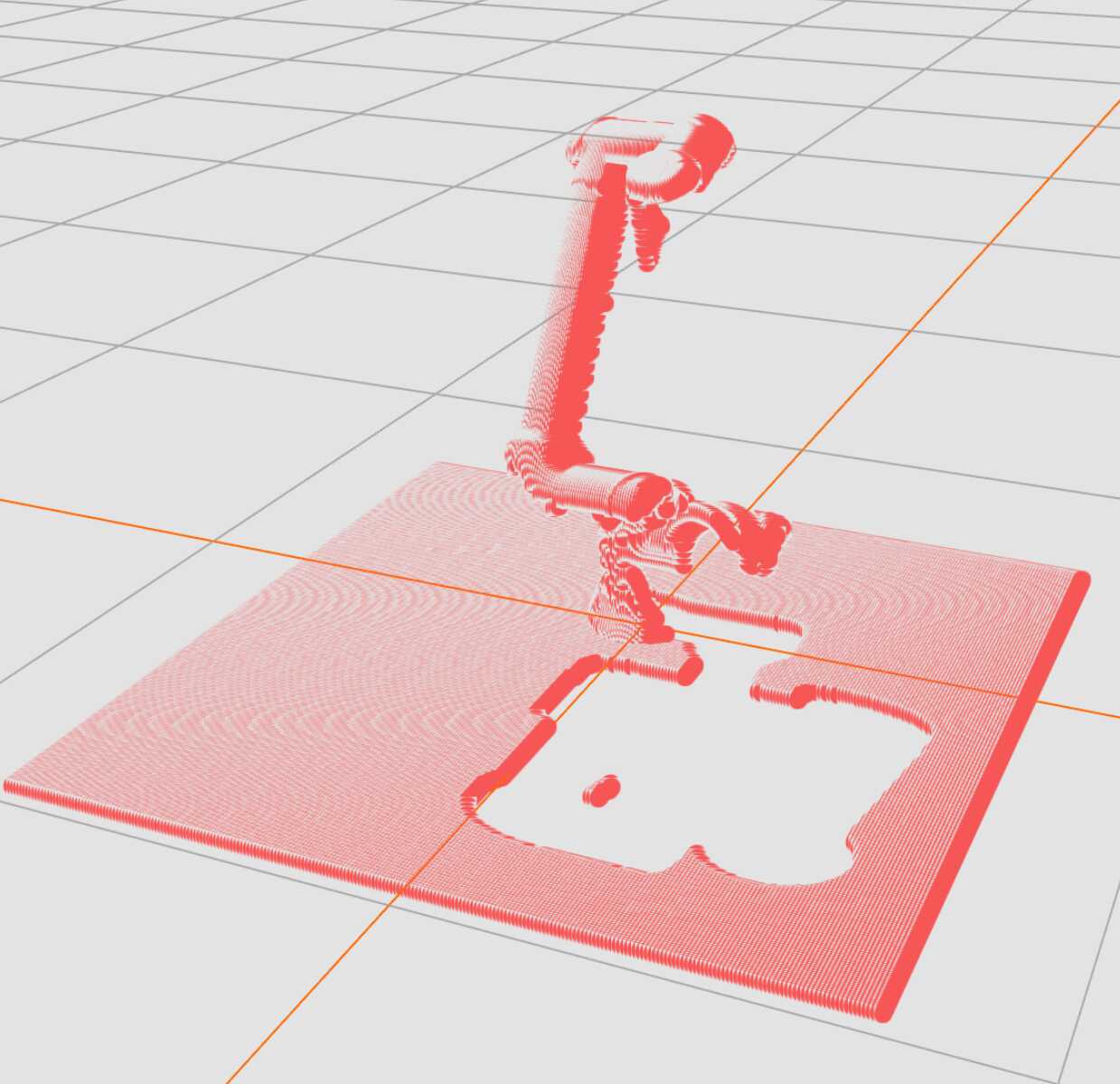

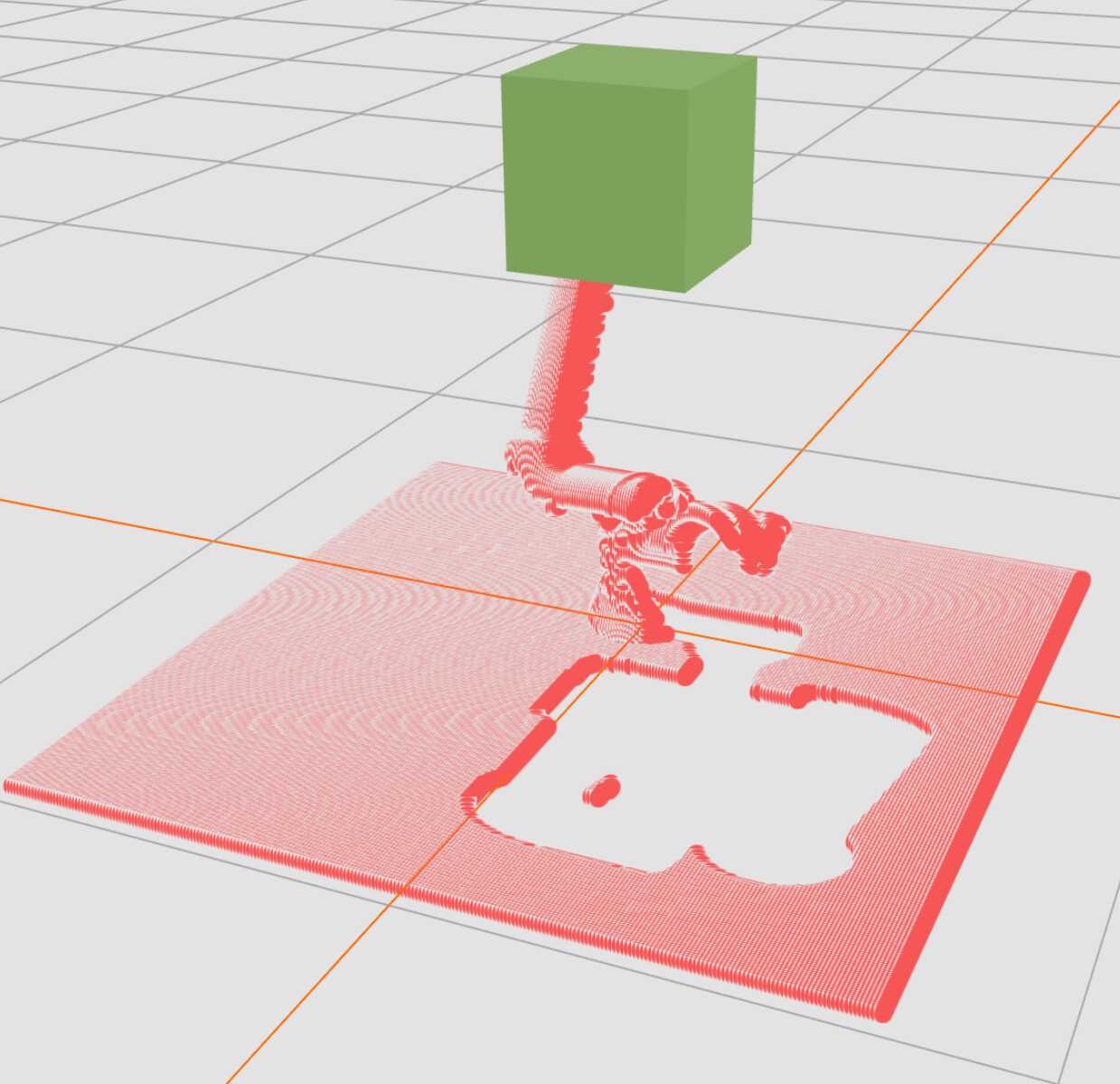

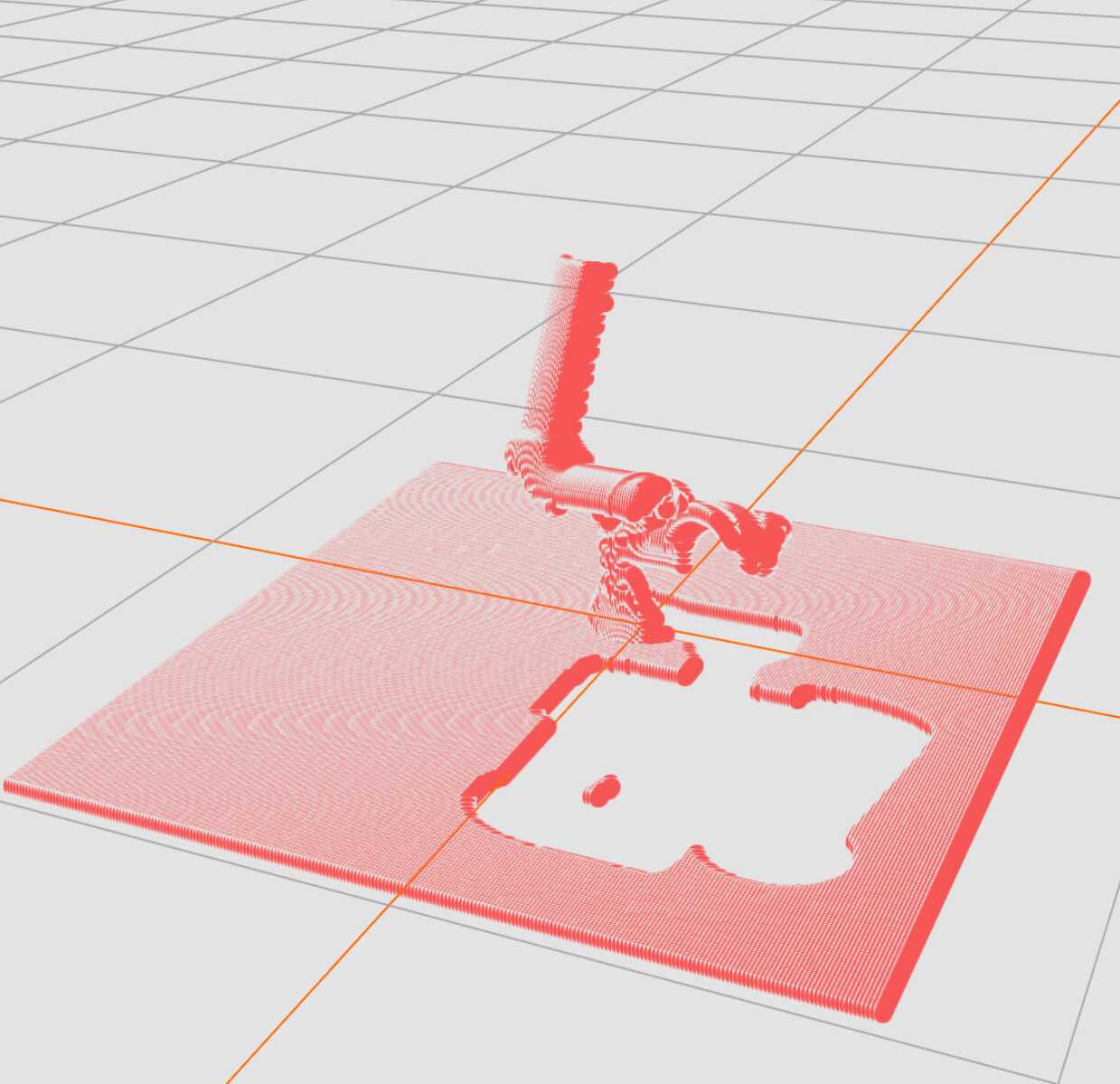

Here is an illustration of the point cloud carving process:

|

|

|
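Conceptually, carving removes every sensor point that falls inside the known object. The sketch below shows this for an axis-aligned box in plain Python, using the same dimensions and center as the box in the example above. The function `carve_box` is hypothetical and assumes the box is not rotated; the actual `carve_point_cloud` implementation may handle arbitrary poses and shapes differently.

```python
def carve_box(points, center, size):
    """Drop all points inside an axis-aligned box given by its center and edge lengths."""
    half = [s / 2.0 for s in size]

    def inside(point):
        return all(abs(point[i] - center[i]) <= half[i] for i in range(3))

    return [p for p in points if not inside(p)]


scan = [
    (0.0, 0.0, 0.1),     # on the box, carved
    (0.2, -0.2, 0.29),   # near the top corner of the box, carved
    (0.4, 0.0, 0.1),     # beside the box, kept
    (0.0, 0.0, 0.5),     # above the box, kept
]
remaining = carve_box(scan, center=(0.0, 0.0, 0.15), size=(0.5, 0.5, 0.3))
```

Only the points outside the box remain as obstacles, so a grasp approach toward the box is no longer reported as a collision with the sensor data.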

Planning with Sensor Data

With the environment set up, including sensor-based obstacles, you can proceed to plan collision-free motions as usual. For in-depth tutorials on motion planning with Jacobi, please refer to the Motion Planning documentation.